Software versions

- Docker:20.10.0

- k8s:1.23.6

Initial setup

shell

systemctl stop firewalld

systemctl disable firewalld

sed -i 's/enforcing/disabled/' /etc/selinux/config

setenforce 0

swapoff -a

sed -ri 's/.*swap.*/#&/' /etc/fstab

# set static host mapping

cat >> /etc/hosts << EOF

10.1.72.58 master

10.1.72.12 node1

EOF

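# Pass bridged IPv4/IPv6 traffic to the iptables chains
cat > /etc/sysctl.d/k8s.conf << EOF
net.bridge.bridge-nf-call-ip6tables = 1
net.bridge.bridge-nf-call-iptables = 1
EOF
# The net.bridge.* keys only exist once the br_netfilter module is loaded
modprobe br_netfilter
sysctl --system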

yum install ntpdate -y

ntpdate time.windows.com

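# Raise the maximum PID value (4194304 is the upper bound on 64-bit kernels, so no larger value is set)
echo "kernel.pid_max=4194304" | tee -a /etc/sysctl.conf && sysctl -w kernel.pid_max=4194304 && sysctl -p

# Raise the per-user process limit (current session only)
ulimit -u 1000000

shell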

set -e

# 1. Disable the firewall (Ubuntu uses ufw)

systemctl stop ufw

systemctl disable ufw

# 2. Disable SELinux (Ubuntu ships without SELinux by default, so this can be skipped)
# If SELinux has been installed, it can be disabled as follows (usually unnecessary)

# apt install selinux-utils -y

# setenforce 0

# sed -i 's/^SELINUX=enforcing/SELINUX=disabled/' /etc/selinux/config

# 3. Disable swap

swapoff -a

sed -ri 's/^\s*(.+\s+)?swap\s+(\S+\s+){2}/#\0/' /etc/fstab

# 4. Add static name resolution to /etc/hosts

cat >> /etc/hosts << EOF

172.16.4.121 master

172.16.4.122 node1

172.16.4.123 node3

EOF

# 5. Let iptables process bridged IPv4 and IPv6 traffic

cat > /etc/sysctl.d/k8s.conf << EOF

net.bridge.bridge-nf-call-ip6tables = 1

net.bridge.bridge-nf-call-iptables = 1

net.ipv4.ip_forward = 1

EOF

modprobe br_netfilter

sysctl --system

# 6. Time synchronization (systemd-timesyncd or chrony is recommended on Ubuntu)
timedatectl set-timezone Asia/Shanghai
timedatectl set-ntp true
# Alternatively, sync once by hand with ntpdate:
apt update && apt install ntpdate -y
ntpdate time.windows.com

# 7. Raise the maximum PID value
echo "kernel.pid_max=4194304" | tee -a /etc/sysctl.conf
sysctl -w kernel.pid_max=4194304
sysctl -p

# 8. Raise the per-user process limit temporarily (current session only)
ulimit -u 1000000

# Make the limit permanent (recommended)
cat >> /etc/security/limits.conf << EOF
* soft nproc 1000000
* hard nproc 1000000
EOF

Install base software

shell

curl -o /etc/yum.repos.d/CentOS-Base.repo http://mirrors.aliyun.com/repo/Centos-7.repo

cat > /etc/yum.repos.d/kubernetes.repo << EOF

[kubernetes]

name=Kubernetes

baseurl=https://mirrors.aliyun.com/kubernetes/yum/repos/kubernetes-el7-x86_64

enabled=1

gpgcheck=0

repo_gpgcheck=0

gpgkey=https://mirrors.aliyun.com/kubernetes/yum/doc/yum-key.gpg https://mirrors.aliyun.com/kubernetes/yum/doc/rpm-package-key.gpg
EOF
yum clean expire-cache
yum makecache

shell

sudo yum install -y yum-utils

sudo yum-config-manager --add-repo http://mirrors.aliyun.com/docker-ce/linux/centos/docker-ce.repo

yum install -y docker-ce-20.10.0-3.el7 docker-ce-cli-20.10.0-3.el7 containerd.io

systemctl enable docker --now

Set the cgroup driver in /etc/docker/daemon.json:

json

{

"exec-opts": ["native.cgroupdriver=systemd"]

}

shell

systemctl daemon-reload

systemctl restart docker

shell

yum install -y kubelet-1.23.6 kubeadm-1.23.6 kubectl-1.23.6

systemctl enable kubelet --now

shell

# Ubuntu 24 has no Docker 20 package in its repositories; install the packages with dpkg -i
# Add the Aliyun Kubernetes APT source

cat <<EOF | tee /etc/apt/sources.list.d/kubernetes.list

deb https://mirrors.aliyun.com/kubernetes/apt kubernetes-xenial main

EOF

# Add the GPG key

curl -s https://mirrors.aliyun.com/kubernetes/apt/doc/apt-key.gpg | gpg --dearmor | tee /usr/share/keyrings/kubernetes-archive-keyring.gpg >/dev/null

# Bind the source to the signing key

sed -i 's|^deb |deb [signed-by=/usr/share/keyrings/kubernetes-archive-keyring.gpg] |' /etc/apt/sources.list.d/kubernetes.list

# Refresh the package index

apt update

apt install -y kubelet=1.23.6-00 kubeadm=1.23.6-00 kubectl=1.23.6-00

# Hold the packages so they are not upgraded
apt-mark hold kubelet kubeadm kubectl
systemctl enable kubelet --now

shell

# Write the Docker daemon configuration file

sudo tee /etc/docker/daemon.json > /dev/null <<EOF

{
  "default-runtime": "nvidia",
  "runtimes": {
    "nvidia": {
      "path": "nvidia-container-runtime",
      "runtimeArgs": []
    }
  },
  "exec-opts": [
    "native.cgroupdriver=systemd"
  ],
  "data-root": "/iflytek/docker/",
  "insecure-registries": [
    "172.29.101.173:8888"
  ],
  "log-driver": "json-file",
  "log-opts": {
    "max-file": "3",
    "max-size": "200m"
  }
}

EOF

# Reload systemd and restart the Docker service
sudo systemctl daemon-reload
sudo systemctl restart docker
# Check that the configuration took effect
docker info | grep -i cgroup

Initialize the master
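Before initializing, it can help to confirm that the host preparation from the earlier steps is actually in place. The following is a minimal sketch and not part of the original procedure: the helper names `active_swap_entries` and `sysctl_keys_enabled` are hypothetical, and the helpers only inspect the files passed to them, not the live kernel state.

```shell
# Print uncommented fstab entries whose filesystem type is swap.
# An empty result means no swap will be re-enabled on reboot.
active_swap_entries() {
  awk '$1 !~ /^#/ && $3 == "swap"' "$1"
}

# Succeed only if every key kubeadm cares about is set to 1 in the given file.
sysctl_keys_enabled() {
  file=$1
  for key in net.bridge.bridge-nf-call-iptables \
             net.bridge.bridge-nf-call-ip6tables \
             net.ipv4.ip_forward; do
    grep -Eq "^[[:space:]]*${key}[[:space:]]*=[[:space:]]*1[[:space:]]*$" "$file" || {
      echo "missing or disabled: $key" >&2
      return 1
    }
  done
}
```

For example, `active_swap_entries /etc/fstab` should print nothing once swap is disabled, and `sysctl_keys_enabled /etc/sysctl.d/k8s.conf` should succeed for a file that sets all three keys.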

yaml

cat <<EOF > kubeadm-config.yaml

apiVersion: kubeadm.k8s.io/v1beta3
kind: InitConfiguration
nodeRegistration:
  kubeletExtraArgs:
    root-dir: "/iflytek/kubelet"
localAPIEndpoint:
  advertiseAddress: 172.29.101.173
  bindPort: 6443
---
apiVersion: kubeadm.k8s.io/v1beta3
kind: ClusterConfiguration
kubernetesVersion: v1.23.6
imageRepository: registry.aliyuncs.com/google_containers
networking:
  podSubnet: 10.244.0.0/16
  serviceSubnet: 10.96.0.0/12
---
apiVersion: kubelet.config.k8s.io/v1beta1
kind: KubeletConfiguration
imageGCHighThresholdPercent: 75
imageGCLowThresholdPercent: 65
EOF

shell

kubeadm init --config kubeadm-config.yaml
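The `podSubnet` and `serviceSubnet` in the configuration above should not overlap with each other, and this can be checked before running the init command. The sketch below is an illustration only: the function names `ip_to_int`, `cidr_range`, and `cidrs_overlap` are hypothetical, and it handles plain IPv4 CIDRs with no input validation.

```shell
# Convert a dotted-quad IPv4 address to a 32-bit integer.
ip_to_int() {
  set -- $(echo "$1" | tr '.' ' ')
  echo $(( ($1 << 24) + ($2 << 16) + ($3 << 8) + $4 ))
}

# Print "first last" (as integers) for a CIDR such as 10.244.0.0/16.
cidr_range() {
  ip=${1%/*}
  prefix=${1#*/}
  base=$(ip_to_int "$ip")
  size=$(( 1 << (32 - prefix) ))
  first=$(( base / size * size ))
  echo "$first $(( first + size - 1 ))"
}

# Return 0 if the two CIDRs share at least one address, 1 otherwise.
cidrs_overlap() {
  set -- $(cidr_range "$1") $(cidr_range "$2")
  [ "$1" -le "$4" ] && [ "$3" -le "$2" ]
}

if cidrs_overlap 10.244.0.0/16 10.96.0.0/12; then
  echo "podSubnet and serviceSubnet overlap"
else
  echo "podSubnet and serviceSubnet are disjoint"
fi
```

The same check can be repeated against the node network (for example the subnet of the addresses in /etc/hosts) if there is any doubt about address reuse.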

shell

mkdir -p $HOME/.kube

sudo cp -i /etc/kubernetes/admin.conf $HOME/.kube/config

sudo chown $(id -u):$(id -g) $HOME/.kube/config

shell

[root@k8s-master ~]# kubectl get node

NAME         STATUS     ROLES                  AGE     VERSION
k8s-master   NotReady   control-plane,master   3m28s   v1.23.6

Joining nodes

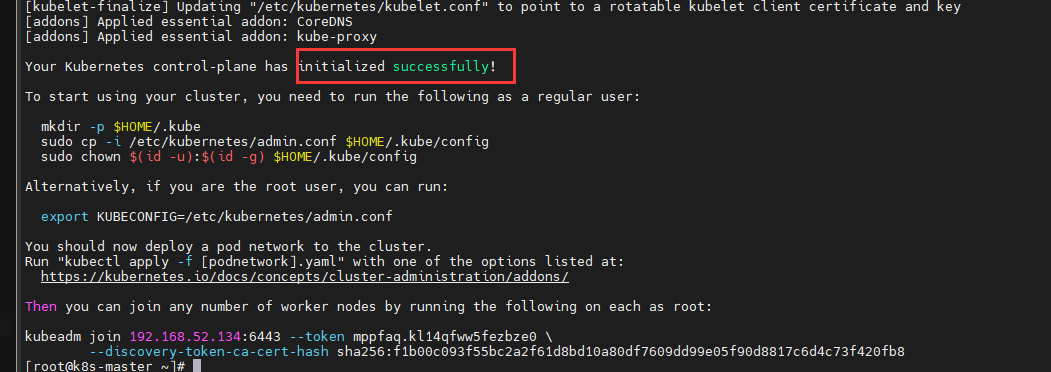

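A node needs a token to join the cluster. The join command, including the token, is printed to the terminal when initialization finishes; if that output has been cleared, the token can be recovered as follows: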

shell

# Create a new token
kubeadm token create
# If the token has not expired, list it directly
kubeadm token list
# Create a token and print the complete join command
kubeadm token create --print-join-command

shell

kubeadm join 192.168.52.134:6443 --token mppfaq.kl14qfww5fezbze0 \
  --discovery-token-ca-cert-hash sha256:f1b00c093f55bc2a2f61d8bd10a80df7609dd99e05f90d8817c6d4c73f420fb8
# Compute the value for --discovery-token-ca-cert-hash
openssl x509 -pubkey -in /etc/kubernetes/pki/ca.crt | openssl rsa -pubin -outform der 2>/dev/null | \
  openssl dgst -sha256 -hex | sed 's/^.* //'

shell

[root@k8s-master ~]# kubectl get node

NAME         STATUS     ROLES                  AGE   VERSION
k8s-master   NotReady   control-plane,master   20m   v1.23.6
k8s-node1    NotReady   <none>                 50s   v1.23.6
k8s-node2    NotReady   <none>                 4s    v1.23.6

Initialize software

shell

kubeadm config images pull --kubernetes-version=v1.23.6 --image-repository k8s-gcr.m.daocloud.io

kubeadm config images pull --kubernetes-version=v1.23.6 --image-repository registry.cn-hangzhou.aliyuncs.com/google_containers

Deploy the CNI network plugin

Master:

shell

curl https://docs.projectcalico.org/manifests/calico.yaml -O

After the download finishes, replace the following setting in calico.yaml:

shell

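# Set this to the podSubnet used at initialization above (10.244.0.0/16)
CALICO_IPV4POOL_CIDR

shell

kubectl apply -f calico.yaml

Pulling the images can be slow because of network problems; the following commands show the current status: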

shell

kubectl get po -n kube-system
kubectl get no
kubectl describe po xxxx -n kube-system
# Pull the images by hand
docker pull calico/kube-controllers:v3.25.0
docker pull calico/cni:v3.25.0
docker pull calico/node:v3.25.0

Testing

shell

kubectl create deployment nginx --image=nginx

shell

kubectl expose deployment nginx --port=80 --type=NodePort

shell

kubectl get pod,svc

Nodes

shell

scp /etc/kubernetes/admin.conf root@k8s-node1:/etc/kubernetes

shell

echo "export KUBECONFIG=/etc/kubernetes/admin.conf" >> ~/.bash_profile
source ~/.bash_profile

A single-node deployment needs the taint removed from the master node

shell

kubectl taint nodes --all node-role.kubernetes.io/master-

# node/master untainted

Token

yaml

apiVersion: v1
kind: ServiceAccount
metadata:
  name: dashboard-admin
  namespace: kube-system
---
kind: ClusterRoleBinding
apiVersion: rbac.authorization.k8s.io/v1
metadata:
  name: dashboard-admin
subjects:
  - kind: ServiceAccount
    name: dashboard-admin
    namespace: kube-system
roleRef:
  kind: ClusterRole
  name: cluster-admin
  apiGroup: rbac.authorization.k8s.io

shell

kubectl get secret -n kube-system | grep admin
# dashboard-admin-token-xxxx   kubernetes.io/service-account-token   3   2m
kubectl describe secret dashboard-admin-token-xxxx -n kube-system

yaml

apiVersion: v1
kind: ServiceAccount
metadata:
  name: ns-admin
  namespace: my-namespace
---
apiVersion: rbac.authorization.k8s.io/v1
kind: Role
metadata:
  name: ns-admin
  namespace: my-namespace
rules:
  - apiGroups: [""] # core resources (pods, services, and so on)
    resources: ["pods", "services", "configmaps", "secrets"]
    verbs: ["get", "list", "watch", "create", "update", "patch", "delete"]
  - apiGroups: ["apps"] # deployments and related workloads
    resources: ["deployments", "replicasets", "statefulsets"]
    verbs: ["get", "list", "watch", "create", "update", "patch", "delete"]
  - apiGroups: ["batch"] # jobs and cronjobs
    resources: ["jobs", "cronjobs"]
    verbs: ["get", "list", "watch", "create", "update", "patch", "delete"]
---
apiVersion: rbac.authorization.k8s.io/v1
kind: RoleBinding
metadata:
  name: ns-admin
  namespace: my-namespace
subjects:
  - kind: ServiceAccount
    name: ns-admin
    namespace: my-namespace
roleRef:
  kind: Role
  name: ns-admin
  apiGroup: rbac.authorization.k8s.io

Reserved resources

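Once the cluster is built, reserve resources on every node for the base system and the Kubernetes components, so that resource exhaustion on one node cannot cascade into a cluster-wide failure.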
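To see what the reservations in this section leave for pods, note that node allocatable memory is capacity minus `systemReserved`, `kubeReserved`, and the `evictionHard` memory threshold. The following is a rough sketch of that arithmetic: the 16Gi node capacity is a hypothetical figure, and the helper names `to_mi` and `allocatable_mi` are made up for illustration.

```shell
# Convert a quantity such as 1Gi or 200Mi to an integer number of Mi.
# Only the Mi and Gi suffixes are handled in this sketch.
to_mi() {
  case $1 in
    *Gi) echo $(( ${1%Gi} * 1024 )) ;;
    *Mi) echo "${1%Mi}" ;;
    *)   echo "unsupported unit: $1" >&2; return 1 ;;
  esac
}

# allocatable = capacity - systemReserved - kubeReserved - evictionHard
allocatable_mi() {
  echo $(( $(to_mi "$1") - $(to_mi "$2") - $(to_mi "$3") - $(to_mi "$4") ))
}

# Hypothetical 16Gi node with 1Gi + 1Gi reserved and a 200Mi eviction threshold
allocatable_mi 16Gi 1Gi 1Gi 200Mi   # prints 14136
```

On a real node the result can be compared with the `Allocatable` section of `kubectl describe node`.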

yaml

# Set per-node values in each node's kubelet configuration
systemReserved:
  cpu: "1" # for node A
  memory: "1Gi"
  ephemeral-storage: "50Gi"
kubeReserved:
  cpu: "1"
  memory: "1Gi"
  ephemeral-storage: "5Gi"
evictionHard:
  memory.available: "200Mi"
  nodefs.available: "10%"
  nodefs.inodesFree: "5%"
  imagefs.available: "15%"

Disk management

local-path

shell

kubectl apply -f https://raw.githubusercontent.com/rancher/local-path-provisioner/v0.0.29/deploy/local-path-storage.yaml

shell

cat > local-path-v2.yaml << EOF
apiVersion: storage.k8s.io/v1
kind: StorageClass
metadata:
  annotations:
    kubectl.kubernetes.io/last-applied-configuration: |
      {"apiVersion":"storage.k8s.io/v1","kind":"StorageClass","metadata":{"annotations":{},"name":"local-path-v2"},"parameters":{"blockCleanerCommand":"/scripts/delete-block.sh","blockCleanerCommandRetries":"5","blockCleanerCommandTimeout":"1m","mountPath":"/iflytek/local-path-v2"},"provisioner":"rancher.io/local-path"}
  name: local-path-v2
parameters:
  blockCleanerCommand: /scripts/delete-block.sh
  blockCleanerCommandRetries: "5"
  blockCleanerCommandTimeout: 1m
  mountPath: /iflytek/local-path-v2
provisioner: rancher.io/local-path
reclaimPolicy: Delete
volumeBindingMode: Immediate
EOF

cat > local-path.yaml << EOF
apiVersion: storage.k8s.io/v1
kind: StorageClass
metadata:
  annotations:
    kubectl.kubernetes.io/last-applied-configuration: |
      {"apiVersion":"storage.k8s.io/v1","kind":"StorageClass","metadata":{"annotations":{},"name":"local-path"},"provisioner":"rancher.io/local-path","reclaimPolicy":"Delete","volumeBindingMode":"WaitForFirstConsumer"}
  name: local-path
provisioner: rancher.io/local-path
reclaimPolicy: Delete
volumeBindingMode: WaitForFirstConsumer
EOF

Ephemeral volumes

yaml

featureGates:
  LocalStorageCapacityIsolation: true

shell

sudo systemctl daemon-reload   # reload the systemd configuration
sudo systemctl restart kubelet # restart the kubelet service

yaml

apiVersion: apps/v1
kind: Deployment
metadata:
  name: nginx
  namespace: default
spec:
  selector:
    matchLabels:
      run: nginx
  template:
    metadata:
      labels:
        run: nginx
    spec:
      containers:
        - image: nginx
          name: nginx
          resources:
            limits:
              ephemeral-storage: 2Gi
            requests:
              ephemeral-storage: 2Gi

shell

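dd if=/dev/zero of=/test bs=4096 count=1024000

This writes more than the 2Gi ephemeral-storage limit, so the container should exit and the pod should be recreated.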
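The size of that test write can be checked with a few lines of arithmetic. This is only an illustrative sketch; the helper names `dd_bytes` and `gi_to_bytes` are hypothetical.

```shell
# Bytes written by dd for a given block size and block count.
dd_bytes() { echo $(( $1 * $2 )); }

# Convert a whole number of Gi to bytes.
gi_to_bytes() { echo $(( $1 * 1024 * 1024 * 1024 )); }

written=$(dd_bytes 4096 1024000)   # bs=4096, count=1024000
limit=$(gi_to_bytes 2)             # ephemeral-storage limit of 2Gi

if [ "$written" -gt "$limit" ]; then
  echo "dd writes $written bytes, which exceeds the limit of $limit bytes"
fi
```

With these values the write is 4194304000 bytes against a limit of 2147483648 bytes, so the test comfortably crosses the threshold.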

Configuration

Changing the NodePort range

shell

vim /etc/kubernetes/manifests/kube-apiserver.yaml

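# Add the following flag
- --service-node-port-range=20000-22767
# Find the kube-apiserver pod in kube-system, delete it, then use describe to check that the setting took effect

Migrating the data disk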

shell

systemctl stop kubelet

shell

mkdir -p /iflytek/kubelet

cp -rf /var/lib/kubelet/pods /iflytek/kubelet/

cp -rf /var/lib/kubelet/pod-resources /iflytek/kubelet/

mv /var/lib/kubelet/pods{,.old}

mv /var/lib/kubelet/pod-resources{,.old}

shell

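# Set in /etc/sysconfig/kubelet (CentOS) or /etc/default/kubelet (Ubuntu); the path must match the directory the data was copied to
KUBELET_EXTRA_ARGS="--root-dir=/iflytek/kubelet"

shell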

systemctl daemon-reload && systemctl restart kubelet

systemctl status kubelet
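After the restart it is worth confirming that the kubelet environment file really points at the directory the data was copied to. The helper below is a small sketch under assumptions: the name `kubelet_root_dir` is hypothetical, and the file to inspect (/etc/sysconfig/kubelet or /etc/default/kubelet) depends on the distribution.

```shell
# Print the value of --root-dir found in a kubelet environment file.
# Prints nothing if the flag is not present.
kubelet_root_dir() {
  sed -n 's/.*--root-dir=\([^" ]*\).*/\1/p' "$1"
}

# Example (assumed path): kubelet_root_dir /etc/default/kubelet
```

If the printed path differs from the copy target used in the `cp` commands above, the kubelet will start with an empty data directory.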